SPSS defaults that can lead you astray when performing exploratory factor analysis

In many respects, the SPSS interface has been a terrific tool for students and researchers alike. The drop-down menu interface allows individuals to perform statistical analyses with just a few simple button pushes. Embedded within many SPSS functions are various defaults, which make running analyses appear even simpler to the data analyst. Unfortunately, analysts often fail to recognize the potential downsides of using these defaults. Overreliance on pre-programmed defaults can result in poor judgments when interpreting one's data. Moreover, too much reliance on these defaults can lead one to believe there is only one approach to analyzing one's data, and can foster overconfidence in one's interpretations. If we do not think about the defaults (including their limitations) that are part of programmed packages, then we cannot change our data analytic approaches to reflect better practice. This posting addresses two defaults in SPSS that can easily lead you astray when performing exploratory factor analysis.

Default #1: Principal components analysis

The first default you should be wary of when performing exploratory factor analysis (EFA) is the extraction method being set to Principal components analysis (PCA).

Although PCA can be (and often is) used as a first step in EFA as a tool for answering the 'number of factors question' (this is addressed below under Default #2), analysts often rely on this default during factor extraction [for examples using this SPSS default, see Stevens (2010) and Green and Salkind (2011)]. According to various authors (e.g., Fabrigar & Wegener, 2012; Russell, 2002), EFA and PCA are grounded in entirely different conceptual and statistical foundations. PCA breaks down the total variation in a set of measured variables into a set of orthogonal components, with the analyst's objective being to arrive at a set of components that does the best job of parsimoniously summarizing that variation. A matrix of observed correlations (the unreduced correlation matrix, with 1.0 in each diagonal position) is the fundamental data on which PCA is carried out. Importantly, this procedure does not distinguish between common variation and error variation in the measured variables. EFA, on the other hand, is based on the common factor model, which does distinguish between common variation among the measured variables and error variation. During EFA, the correlation matrix that is factored is a reduced correlation matrix, in which the diagonal contains initial estimates of communality (the variance that is shared between a given variable and the remaining variables). As described by Russell (2002), once the initial factoring has taken place, new communality estimates are substituted into the principal diagonal and the model is re-estimated. This process continues until the change in communalities across iterations falls below a minimum (or the number of programmed iterations has been exhausted). The final set of communalities represents an estimate of the 'proportion of variance accounted for by the common factors' (Gorsuch, 1983, p. 29).
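The contrast between the two procedures can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not what SPSS does internally: the one-factor loadings are made up, the function names `pca_loadings` and `paf_loadings` are hypothetical, and the starting communalities are squared multiple correlations (SMCs).

```python
import numpy as np

def pca_loadings(R, n_components):
    """Component loadings from the unreduced matrix (1.0 on the diagonal)."""
    eigvals, eigvecs = np.linalg.eigh(R)
    order = np.argsort(eigvals)[::-1][:n_components]
    return eigvecs[:, order] * np.sqrt(eigvals[order])

def paf_loadings(R, n_factors, tol=1e-10, max_iter=10000):
    """Iterated principal axis factoring on the reduced matrix."""
    # Initial communality estimates: squared multiple correlations.
    h2 = 1.0 - 1.0 / np.diag(np.linalg.inv(R))
    for _ in range(max_iter):
        reduced = R.copy()
        np.fill_diagonal(reduced, h2)  # communalities replace the 1.0s
        eigvals, eigvecs = np.linalg.eigh(reduced)
        order = np.argsort(eigvals)[::-1][:n_factors]
        kept = np.clip(eigvals[order], 0.0, None)  # guard against negatives
        loadings = eigvecs[:, order] * np.sqrt(kept)
        new_h2 = (loadings ** 2).sum(axis=1)
        converged = np.max(np.abs(new_h2 - h2)) < tol
        h2 = new_h2
        if converged:
            break
    return loadings, h2

# Hypothetical population model: one common factor, modest loadings.
true_loadings = np.array([0.6, 0.6, 0.5, 0.5, 0.4, 0.4])
R = np.outer(true_loadings, true_loadings)
np.fill_diagonal(R, 1.0)

pca_h2 = (pca_loadings(R, 1) ** 2).sum(axis=1)
paf_L, paf_h2 = paf_loadings(R, 1)

print("true communalities:", np.round(true_loadings ** 2, 3))
print("PAF communalities: ", np.round(paf_h2, 3))
print("PCA 'communalities':", np.round(pca_h2, 3))
```

In this constructed example the PAF communalities should recover the squared generating loadings, whereas the component-based values sum to more, because PCA also absorbs variance that the common factor model treats as unique.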

Velicer and Jackson (1990) have argued that it makes no difference whether one uses PCA or a common factor analytic procedure during EFA. A central tenet of their argument was that PCA and EFA solutions are often similar and do not lead researchers to substantively different conclusions. This claim would have had greater relevance back in 1990, when (low) computing power would have encouraged the use of the simpler PCA approach over the more computationally demanding extraction methods associated with common factor analysis (see Fabrigar et al., 1999). A number of other authors (e.g., Fabrigar et al., 1999; Russell, 2002; Fabrigar & Wegener, 2012; Widaman, 2018) have raised counterarguments to this and other points made by Velicer and Jackson (1990). First, Fabrigar et al. (1999) noted that increases in computational efficiency and speed have essentially obviated the need for choosing the less computationally demanding PCA when extracting factors. Second, Fabrigar and Wegener (2012) argued that although EFA and PCA often produce similar results, there are plenty of circumstances where those results may differ. Differences between the procedures are more likely to be observed in those cases where 'communalities are low (.40 or less) and there are a modest number of [measured variables] loading on each factor' - a circumstance that is 'common in social science research' (p. 33). Third, Fabrigar and Wegener (2012) argued that, fundamentally, the common factor model is a more realistic basis for factoring, as it recognizes the distinction between variation in the factored variables attributable to common factors and variation that is unique to those variables (i.e., measurement error). Finally, Widaman (2018) pointed out that since EFA is grounded in the common factor model, there is greater reason to expect that with 'several rounds of successful replication', this approach would do a better job than PCA in predicting how a model would perform using confirmatory factor analytic procedures.

[The above paragraph was just a sampling of part of the debate. See Fabrigar and Wegener (2012), Fabrigar et al. (1999), and Thompson (2004) for more thorough coverage of the debate!]

At this point, it is my hope that you can see the potential problems with relying on the default PCA approach to factor extraction in SPSS. A number of alternative approaches are provided in SPSS by clicking the 'down arrow' (see figure below). Two of the more common extraction approaches are Principal axis factoring (PAF; aka, iterated principal factoring) and Maximum likelihood estimation (MLE). Keep in mind that MLE offers a chi-square goodness of fit test to evaluate the fit of a model containing a certain number of factors (and indeed, it can be used as an alternative to the eigenvalue cutoff default in SPSS). However, MLE is grounded in the assumption of multivariate normality of your indicators. PAF does not assume multivariate normality.

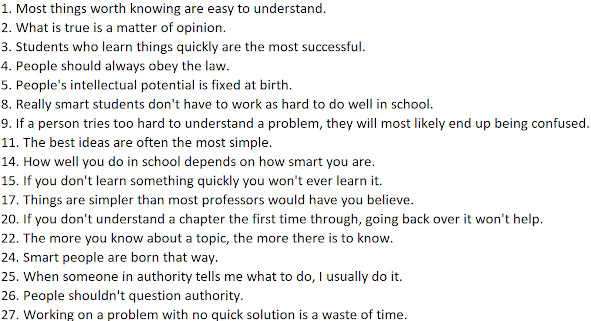

Example of selection of Principal axis factoring

Default #2: Eigenvalue cutoff default

An important task during EFA is to arrive at an estimate of the number of latent factors that may account for the intercorrelations among the variables being factored. That estimate determines how many factors you ultimately extract in the final model. There are various methods by which one can investigate the question of 'how many factors to extract', and not all methods will necessarily agree (Hayton et al., 2004). In a given factor analysis, some methods may suggest extracting more factors, whereas others may suggest fewer. Because methods can disagree on the number of factors to retain, it is problematic to base one's decision on only one of them. As noted by Fabrigar and Wegener (2012), 'determining the appropriate number of common factors is a decision that is best addressed in a holistic fashion by considering the configuration of evidence presented by several of the better performing procedures' (p. 55).

SPSS has a preprogrammed default (i.e., the eigenvalue cutoff rule) that - if you are not careful - will shortcut the decision-making process by deciding for you the number of factors that should be retained. The eigenvalue cutoff rule is essentially this: The number of factors an analyst should retain is equal to the number of factors that have eigenvalues greater than 1.0 (Kaiser, 1960). The assumption behind this rule is that any factor that is retained should account for as much variation in a set of indicators as a single (standardized) variable [the variance of a standardized variable is 1.0]. What is important to realize about Kaiser's (1960) approach is that he applied this rule to eigenvalues derived from an unreduced correlation matrix (i.e., 'the number of latent roots greater than one of the observed correlation matrix'). In a nutshell, his application of the rule followed extraction using the principal components method. Application of this method to a reduced correlation matrix is not correct, although this also appears to be a fairly common problem (Russell, 2002).
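To make the distinction concrete, here is a minimal sketch in Python of the eigenvalue cutoff applied to an unreduced versus a reduced correlation matrix. The two-factor population model and its loadings of .50 are assumptions chosen purely for illustration, and SMCs are assumed as the communality estimates placed on the diagonal of the reduced matrix.

```python
import numpy as np

# Hypothetical population model: two orthogonal factors,
# three indicators each, all loadings equal to .50.
loadings = np.zeros((6, 2))
loadings[:3, 0] = 0.5
loadings[3:, 1] = 0.5

# Unreduced correlation matrix: 1.0 in every diagonal position.
R = loadings @ loadings.T
np.fill_diagonal(R, 1.0)
unreduced_eigs = np.sort(np.linalg.eigvalsh(R))[::-1]

# Reduced correlation matrix: SMCs replace the 1.0s on the diagonal.
smc = 1.0 - 1.0 / np.diag(np.linalg.inv(R))
reduced = R.copy()
np.fill_diagonal(reduced, smc)
reduced_eigs = np.sort(np.linalg.eigvalsh(reduced))[::-1]

# The "greater than 1.0" rule applied to each set of eigenvalues.
kaiser_unreduced = int((unreduced_eigs > 1.0).sum())
kaiser_reduced = int((reduced_eigs > 1.0).sum())

print("unreduced eigenvalues:", np.round(unreduced_eigs, 3))
print("reduced eigenvalues:  ", np.round(reduced_eigs, 3))
print("retained (unreduced): ", kaiser_unreduced)
print("retained (reduced):   ", kaiser_reduced)
```

The eigenvalues of the unreduced matrix sum to the number of variables, so 1.0 corresponds to the variance of one standardized variable. The eigenvalues of the reduced matrix sum only to the total of the communality estimates, so the same threshold no longer has that meaning. In this constructed case the rule retains two components on the unreduced matrix and none on the reduced one.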

Despite its large continued influence on factor analytic decisions, the eigenvalue cutoff rule has been roundly criticized within the literature. Fabrigar et al. (1999), Russell (2002), and Zwick and Velicer (1986) have all noted that the method often leads to overfactoring (and sometimes underfactoring). Fabrigar and Wegener (2012) argued that the use of the eigenvalue cutoff rule (as applied to the unreduced correlation matrix) is fundamentally incompatible with the common factor model (whereby factors are derived from a reduced correlation matrix). They also challenged the mechanical nature of the procedure and the seeming arbitrariness of deeming a factor with an eigenvalue of 1.01 as one that should be retained, but a factor with an eigenvalue of .99 not being worthy of retention.

In SPSS, the eigenvalue cutoff rule is correctly applied to a principal components solution (see the eigenvalues under the Initial Eigenvalues column below) to arrive at an estimate of the number of factors that should be extracted. However, rather than leaving the decision up to the analyst on whether to incorporate this method into their decision-making on how many factors to extract, SPSS forces a common factor solution consistent with the eigenvalue cutoff rule. The output below was generated where the extraction method was set for Principal Axis Factoring (PAF). With the default cutoff in place, a two-factor solution was forced - where the eigenvalues under the Extraction Sums of Squared Loadings are the eigenvalues from the final iterated PAF solution. [FYI, for this discussion, I did not request rotation.] The Communalities table contains initial communalities (in the form of squared multiple correlations that are substituted into the reduced correlation matrix) and those estimated with the final two-factor model.

One might reasonably suggest that all you have to do is to utilize various other methods (such as the scree plot, parallel analysis, performing model comparisons using MLE factor analysis, performing the MAP test) to decide on the number of factors to retain and then force the solution yourself using the 'Fixed number of factors' option (just below the default setting in SPSS). Ideally, this would be the strategy you would take. However, in my experience this is not something many novice factor analysts understand or recognize as a possibility (let alone a preferred approach to extracting factors). This seems to be made worse by the number of tutorials, textbooks, and SPSS guides that continue to promulgate the heavy use of the eigenvalue cutoff default in SPSS as a primary basis for deciding on number of factors (with or without also referencing the scree plot). Unfortunately, these are the same issues that were raised by Zwick and Velicer (1986) roughly 27 years ago when discussing the SPSS eigenvalue default in addition to 'explicit endorsement [of its use] by textbook authors' (p. 437).

All this is to say that it is very easy to succumb to the simplicity afforded by relying on the eigenvalue default in SPSS - a default based on a rule that has been shown to be quite problematic when not considered alongside other sources of information. Other programs - while also imperfect - build several methods for determining the number of factors into their interfaces. For example, the Exploratory factor analysis interfaces in the Jamovi program (an open source program that can be downloaded here: link) and the JASP program (another open source program: link) allow the user to easily use the eigenvalue cutoff rule (if desired), inspect the scree plot, perform parallel analysis, and even test the fit of competing factor models (using MLE) to assist them in determining the number of factors to retain. Generating a scree plot in SPSS is fairly simple (just click the scree plot option), although the scree plot has its own limitations (e.g., the element of subjectivity in interpretation; Pituch & Stevens, 2016). However, to perform parallel analysis (particularly on a reduced correlation matrix) or the MAP test in SPSS, the only options I am aware of require using syntax files provided by Brian O'Connor. It is possible in SPSS to generate chi-square tests of model fit using MLE assuming different numbers of factors. However, this process is not particularly straightforward, and the chi-square test can lead to overfactoring - particularly when working with large sample sizes where tests are overpowered.
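For readers curious about what parallel analysis involves, the basic logic can be sketched in Python. This is a simplified version under stated assumptions: it works on the unreduced correlation matrix (the component-based variant, not the reduced-matrix variant mentioned above), it compares observed eigenvalues against the 95th percentile of eigenvalues from random normal data, and the function name `parallel_analysis` and the simulated two-factor data are hypothetical. It is not a port of O'Connor's syntax files.

```python
import numpy as np

def parallel_analysis(data, n_sims=200, percentile=95, seed=0):
    """Count leading eigenvalues that exceed those from random data."""
    rng = np.random.default_rng(seed)
    n, p = data.shape
    corr = np.corrcoef(data, rowvar=False)
    observed = np.sort(np.linalg.eigvalsh(corr))[::-1]

    # Eigenvalues from uncorrelated normal data of the same shape.
    random_eigs = np.empty((n_sims, p))
    for i in range(n_sims):
        noise = rng.standard_normal((n, p))
        noise_corr = np.corrcoef(noise, rowvar=False)
        random_eigs[i] = np.sort(np.linalg.eigvalsh(noise_corr))[::-1]
    threshold = np.percentile(random_eigs, percentile, axis=0)

    # Retain dimensions until the first observed eigenvalue falls short.
    exceeds = observed > threshold
    n_retain = p if exceeds.all() else int(np.argmax(~exceeds))
    return n_retain, observed, threshold

# Hypothetical data: two orthogonal factors, four indicators each.
rng = np.random.default_rng(42)
n = 500
loadings = np.zeros((8, 2))
loadings[:4, 0] = 0.7
loadings[4:, 1] = 0.7
factors = rng.standard_normal((n, 2))
unique = rng.standard_normal((n, 8)) * np.sqrt(1 - 0.7 ** 2)
data = factors @ loadings.T + unique

n_retain, observed, threshold = parallel_analysis(data)
print("observed eigenvalues:", np.round(observed, 2))
print("random 95th pct:     ", np.round(threshold, 2))
print("dimensions retained: ", n_retain)
```

The design choice worth noting is the benchmark: instead of a fixed cutoff of 1.0, each observed eigenvalue is compared with what random data of the same size would produce, which accounts for sampling error in the eigenvalues.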

For those of you who want to know more about the alternatives I have discussed in this post, I will be tackling some of them in upcoming posts! My next posting addresses the question, "Why effectively addressing the 'number of factors question' matters during EFA". In that posting, I also discuss some of the alternatives for determining the number of factors, any tendency for a given alternative to lead to under- or over-factoring, and which alternatives are preferable.

References

Fabrigar, L. R., & Wegener, D. T. (2012). Exploratory factor analysis. Oxford University Press.

Fabrigar, L. R., Wegener, D. T., MacCallum, R. C., & Strahan, E. J. (1999). Evaluating the use of exploratory factor analysis in psychological research. Psychological Methods, 4(3), 272–299.

Gorsuch, R. (1983). Factor analysis (2nd ed.). Lawrence Erlbaum Associates.

Green, S., & Salkind, N. (2011). Using SPSS for Windows and Macintosh: Analyzing and understanding data. Prentice Hall.

Hayton, J. C., Allen, D. G., & Scarpello, V. (2004). Factor retention decisions in exploratory factor analysis: A tutorial on parallel analysis. Organizational Research Methods, 7(2), 191–205.

Kaiser, H. F. (1960). The application of electronic computers to factor analysis. Educational and Psychological Measurement, 20, 141–151.

Pituch, K. A., & Stevens, J. P. (2016). Applied multivariate statistics for the social sciences (6th ed.). Routledge.

Russell, D. W. (2002). In search of underlying dimensions: The use (and abuse) of factor analysis in Personality and Social Psychology Bulletin. Personality and Social Psychology Bulletin, 28(12), 1629–1646.

Stevens, J. P. (2010). Applied multivariate statistics for the social sciences (5th ed.). Routledge.

Thompson, B. (2004). Exploratory and confirmatory factor analysis: Understanding concepts and applications. American Psychological Association.

Velicer, W. F., & Jackson, D. N. (1990). Component analysis versus common factor analysis: Some issues in selecting an appropriate procedure. Multivariate Behavioral Research, 25(1), 1–28.

Widaman, K. F. (2018). On common factor and principal component representations of data: Implications for theory and for confirmatory replications. Structural Equation Modeling, 25(6), 829–847.

Zwick, W. R., & Velicer, W. F. (1986). Comparison of five rules for determining the number of components to retain. Psychological Bulletin, 99(3), 432–442.
